Detailed explaination of AdaBoost

Boosting is one of the most powerful ideas introduced in the last twenty years. It was originally designed for classification problems but can be extended to regression problems as well. It is a method where multiple models are created using a logic and then combined together to get a prediction which is better than what we could have got using a single strong model.

With respect to the idea of combining multiple models together it is similar to bagging but the way in which boosting comes up with multiple models is totally different from the way things are done in bagging.

To understand the concept of boosting intuitively, we will consider one of the earliest and important boosting algorithm called as “AdaBoost.M1” or “Discrete AdaBoost”. To understand this algorithm we set a context where our response variable has two classes . Note that by making a few changes, AdaBoost can be extended to a continuous response or a multiclass response as well but for now we will stick to the response.

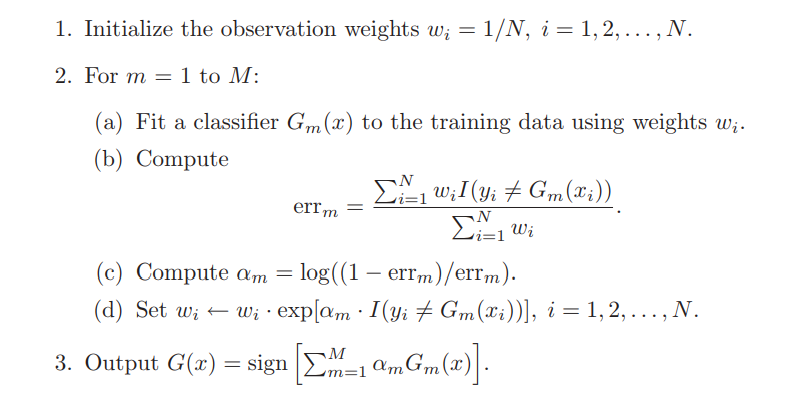

Following is the pseudo code of AdaBoost.M1 algorithm. We will disect and look at each and every step in detail after that.

To simplify things a bit, the algorithm:

- Takes in the following parameters: Total number of iterations/runs of a classifier (Let us stick to decision trees for now so decision trees) & a training data of training samples.

- Starts off with weighting equally, each of the training example by weight

- Comes up with the first decision tree that minimizes a weighted error function given by

- Calculates an update parameter for the first tree using and more generally at each is calculated using

- updates the weights (i.e. we do what we were doing in Step 2) and the process continues by executing Step 3, 4 and 5.

The above mechanism raises two important questions. Why are the weights assigned to the training examples? And What is the use of ??

The answers to these two questions are the key to understanding how and why boosting works.

1. Why assign weights to training examples?

Lets look at the first question about the weights. At first, the training samples are weighted, then a tree is fit using the weighted error function. After that, while fitting subsequent trees does the job of reducing/not increasing the weights of those training examples which were correctly classified by the current tree and increasing the weights of those which were misclassified. (Do not worry if you don’t understand how updates the weights. We will look at that shortly but just keep in mind at a high level what does to the weights according to correct classification)

To illustrate why this weight updation works: Once is fit, we will have which will update the weights for accordingly. Now consider the fitting of the next tree . We know that decision tree algorithms choose that feature and that value of the feature which minimizes the error at each split. Our error function is . Note the indicator variable . Since we have this indicator in the error function, we can see that the decision tree will try to pick the features for the splits which correctly classify the highly weighted observations (misclassified by the previous tree) since if these observations were misclassified again then the indicator variable will take the value of and the high weight will be considered in the evaluation of error. So the decision tree will be forced to prioritize the previously misclassified examples because if it does not, then the weighted error would cease to come down and converge to a minima. Its like manipulating an algorithm to focus on previous misclassified/ highly weighted examples by including the weights in the loss function. This is why weights are assigned to each training example and are accomodated in the error.

So higher the weight of a particular training sample, more the chance of the algorithm finding the right criteria to correctly classify it.

2. What is the use of ?

The second important question is, how does increase the weights of training example misclassified by a classifier and decrease the weights of correctly classified training examples? Let us look at this by looking at a few cases.

Case 1

Error of is high

This means is high. Lets assume error is 0.9. Then

As we can see turned out to be low. is inversely proportional to

Now let us consider weight updation for the sub-cases of correctly-classified/mis-classified training examples. The formula for weight updation for any training example is .

Case 1.A

Weights of Examples correctly-classified by

For rightly classified examples, the indicator variable is zero. This implies the exponent term in the weight updation becomes 1 (). This implies updated weight i.e. no change.

Case 1.B

Weights of Examples mis-classified by

For mis-classified examples, the indicator variable is 1. This implies the exponent term in the weight updation becomes . And therefore,

Hence, when error is high, alpha is low and the weights of mis-classified samples are increased for the next iteration of the classifier which means that next classifier will be forced to prioritize the classification of the mis-classified samples.

This is how the combination Error, Alpha and Weights is used to create iteratively better learners.

Case 2

Error of is low

This means is low. Lets assume error is 0.1. Then

As we can see turned out to be high. is inversely proportional to

Now let us consider weight updation for the sub-cases of correctly-classified/mis-classified training examples. The formula for weight updation for any training example is .

Case 2.A

Weights of Examples correctly-classified by

For rightly classified examples, the indicator variable is zero. This implies the exponent term in the weight updation becomes 1 (). This implies updated weight i.e. no change.

Case 2.B

Weights of Examples mis-classified by

For mis-classified examples, the indicator variable is 1. This implies the exponent term in the weight updation becomes . And therefore,

Summary

So this is how the Boosting works in essence. Examples are weighted according to their correct classification and weights are updated at each iteration.